By Jim O’Neal

Lyndon Baines Johnson was born in August 1908 in the Texas Hill Country, nearly 112 years ago (tempus does fugit!). He shined shoes and picked cotton for pocket money, graduating from high school at age 15. Both his parents were teachers and encouraged the reading habits that would benefit him greatly for the rest of his life.

Tired of both books and study, he bummed his way to Southern California, where he picked peaches, washed dishes and did other odd jobs like a common hobo. The deep farm recession forced him back to Texas, where he borrowed $75 to earn a teaching degree from a small state college. Teaching impoverished Mexican-American children gave him a unique insight into poverty. He loved to tell stories from that time in his life, especially when he was working on legislation that improved life for common people.

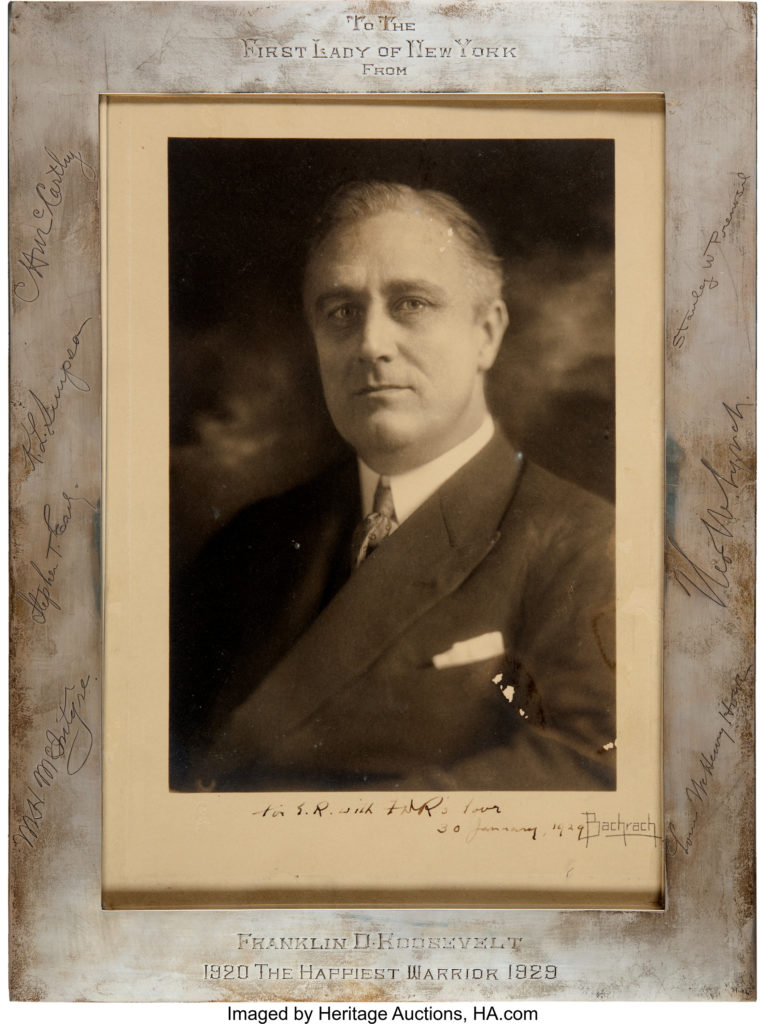

His real power developed when he electrified the rural Hill Country and created a pool of money from power companies, which he doled out to politicians all over the country who needed campaign funds and were willing to barter their votes in Congress. The women and girls of the Hill Country were known as “bent women” from toting water, two buckets at a time, from wells to their homes. Electricity to pump the water spared a generation of women from becoming hump-backed. They said of LBJ, “He brought us light.” This caught FDR’s attention and led to important committee assignments.

He married 21-year-old Claudia Alta Taylor in 1934 (at birth, a nanny had exclaimed, “She looks just like a little lady bird”). A full-grown Lady Bird parlayed a small inheritance into an investment in an Austin radio station that grew into a multimillion-dollar fortune.

Robert Caro has written about LBJ’s ambition, decisiveness and willingness to work hard. But how does that explain his reluctance to run for president in 1960? He had been Senate Majority Leader, had accumulated lots of political support and had a growing reputation for his civil rights record. He even told his associates, “I am destined to be president. I was meant to be president. And I’m going to be president!” Yet in 1958, when he was almost perfectly positioned to make his move, he was silent.

His close friend, Texas Governor John Connally, had a theory: “He was afraid of failing.”

His father was a fair politician, but he failed financially: he lost the family ranch, plunged into bankruptcy and became the butt of town jokes. In simple terms, LBJ was afraid to seek the nomination and lose. That explains why he didn’t announce until it was too late and JFK had it sewed up.

Fear of failure.

After JFK won the 1960 nomination at the Democratic National Convention in Los Angeles, he knew LBJ would be a valuable running mate on the Democratic ticket against Richard Nixon. Johnson’s Southwestern drawl expanded the base, and Texas’s 24 electoral votes were too tempting to pass up. Both men were staying at the Biltmore Hotel in L.A., a mere two floors apart. Kennedy personally convinced LBJ to accept, despite brother Bobby’s three (obviously unsuccessful) attempts to get him to decline.

The 1960 election was incredibly close, with only about 100,000 votes separating Kennedy and Nixon. Insiders were sure that a recount would uncover corruption in Illinois and that Nixon would be declared the winner. But in a big surprise, RMN refused to demand a recount, to spare the country massive disruption. (Forty years later, Bush v. Gore demonstrated the chaos of the 2000 Florida “hanging chads” debacle and the stain left on SCOTUS when the Court stopped the Florida recount.)

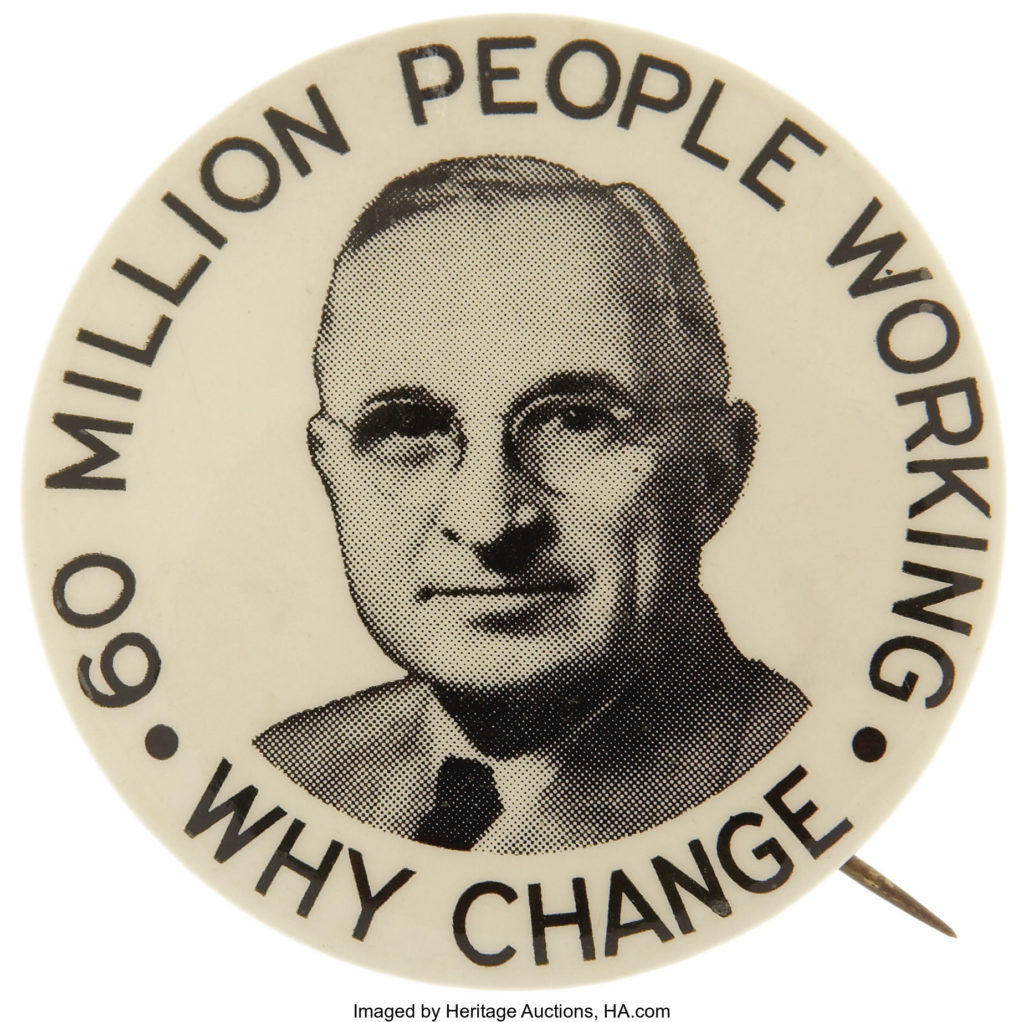

After the Kennedy assassination in November 1963, LBJ was despondent, sure that he would be remembered as the “accidental president.” But when he demolished Barry Goldwater in 1964, the old Lyndon was back. The Johnson-Humphrey ticket won one of the greatest landslides in American history: LBJ got 61.1 percent of the popular vote and 486 electoral votes to Goldwater’s 52. More importantly, Democrats increased their majorities in both houses of Congress.

This level of dominance gave LBJ the leverage to implement his full Great Society agenda with the help of the 89th Congress, which approved multibillion-dollar budgets. After LBJ ramrodded his liberal legislative programs through Congress in 1965-66, it seemed that he might go down in history as one of the nation’s truly great presidents. But his failure to bring Vietnam to a successful conclusion, the riots in scores of cities in 1967-68 and the spirit of discontent that descended on the country turned his administration into a disaster.

On Jan. 22, 1973, less than a month after President Truman died, the 64-year-old Johnson died of a heart attack. His fear of failure, a silent companion.

Intelligent Collector blogger JIM O’NEAL is an avid collector and history buff. He is president and CEO of Frito-Lay International [retired] and earlier served as chair and CEO of PepsiCo Restaurants International [KFC, Pizza Hut and Taco Bell].