By Jim O’Neal

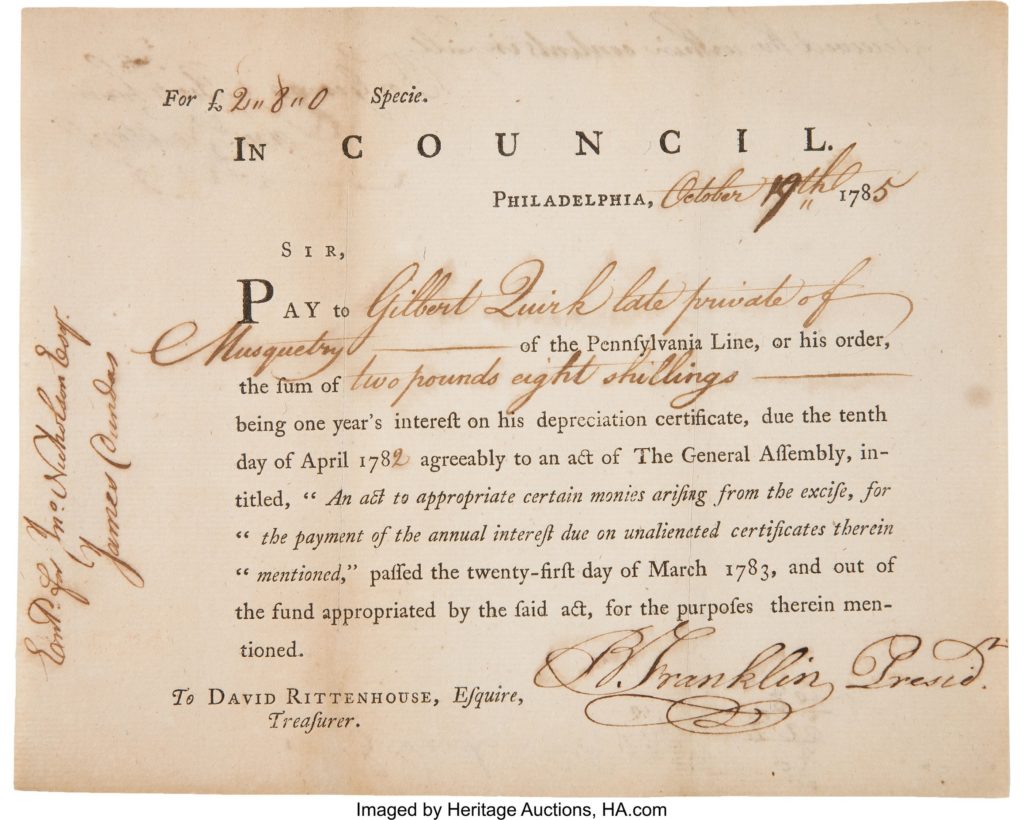

Typically, any discussion of Ben Franklin will eventually include the question of why he was never president of the United States. He certainly earned the title of “The First American,” as well as an astonishing reputation as a leading politician, diplomat, scientist, printer, inventor, statesman and brilliant polymath. Biographer Walter Isaacson said it best: “Franklin was the most accomplished American of his age and the most influential in shaping the type of society America would become.”

He was certainly there in Philadelphia on May 5, 1775, representing Pennsylvania as a delegate to the Second Continental Congress, even as skirmishes escalated with the British military. The following June, he was an influential member of the Committee of Five that drafted the Declaration of Independence. In fact, he was the only one of the Founding Fathers to sign the four most important documents: the Declaration of Independence; the 1778 Treaty of Alliance with France (securing French military support in the war); the Treaty of Paris (1783), ending the war with Great Britain; and the historic United States Constitution in 1787, which is still firmly the foundation of the nation.

He proved to be a savvy businessman who made a fortune as a printer, and a prolific scientist whose work is still revered. His success gave him the personal freedom to spend time in England and France, trading ideas with the great minds of the world and enjoying the company of the social elite.

He was also a shrewd administrator and had a unique talent for writing or making insightful observations on all aspects of life. The only significant issue that seemed to perplex him was the waves of German immigrants that flooded many parts of Pennsylvania. They could not speak English and they imported books in German. They erected street signs in German and made no attempts to integrate into the great “melting pot.” Worse was the fact that in many places they represented one-third of the population. He suggested voiding every deed or contract that was not in English. He was just as blunt about the felons the Crown dumped on the colonies, which in his opinion resulted in unsafe cities; he proposed exporting rattlesnakes to Britain in return. Although the Pennsylvania Dutch (Germans) were seen as an imbalance in the 18th century, by the 1850s they were well suited to the western expansion and proved to be ideal agrarians.

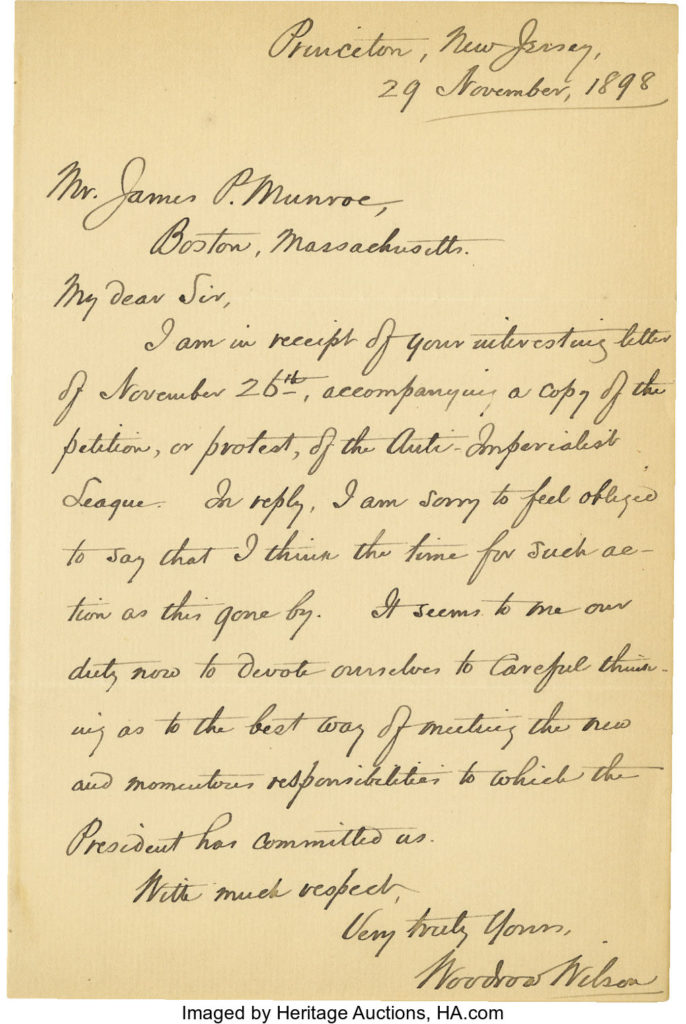

When World War I erupted in 1914, most Americans viewed it with a sense of detachment and considered it just another European conflict. In a nation of immigrants focused on improving their personal lives, there was little time to root for the homeland. This neutrality helped Woodrow Wilson win a tight reelection in 1916, and it would last until the spring of 1917. The start of war in Europe also coincided neatly with the end of the 1913-1914 recession in America, so it was a boon for the economy.

American exports to belligerent nations began rising rapidly, from $825 million in 1913 to $2.25 billion in 1917. In addition to steel, arms and food, American banks made large loans to finance these supplies. Inevitably, the U.S. was drawn into the war as German submarines sank supply ships. The 1915 sinking of the Lusitania, which carried a healthy complement of American passengers, had already inflamed public opinion. Germany then lit the powder keg in 1917 by attempting to entice Mexico to attack America (the Zimmermann Telegram). Wilson was forced to ask Congress to declare war.

After we were provoked into the largest and deadliest war the world had seen, Wilson decided that all Americans would be expected to support the war effort. The federal government opened its first propaganda bureau … the “Committee on Public Information.” Thus came the creation of the first true “fake news.” Most forms of dissent were banned and it was even unlawful to advocate pacifism. Yes, German-Americans experienced substantial repression as war hysteria rippled through the system. But it was nothing close to what Japanese-Americans suffered after Japan attacked Pearl Harbor.

On Feb. 19, 1942, President Franklin Delano Roosevelt issued Executive Order 9066, which authorized federal officials to round up Japanese-Americans, including U.S. citizens, and remove them from the Pacific Coast. By June, 120,000 Americans of Japanese descent in California, Oregon and Washington were ordered to assembly centers like fairgrounds and racetracks … with barbed wire … and then shipped to permanent internment camps. Then, astonishingly, they were asked to sign up for military service, and some males 18-45 did since they were subject to the draft.

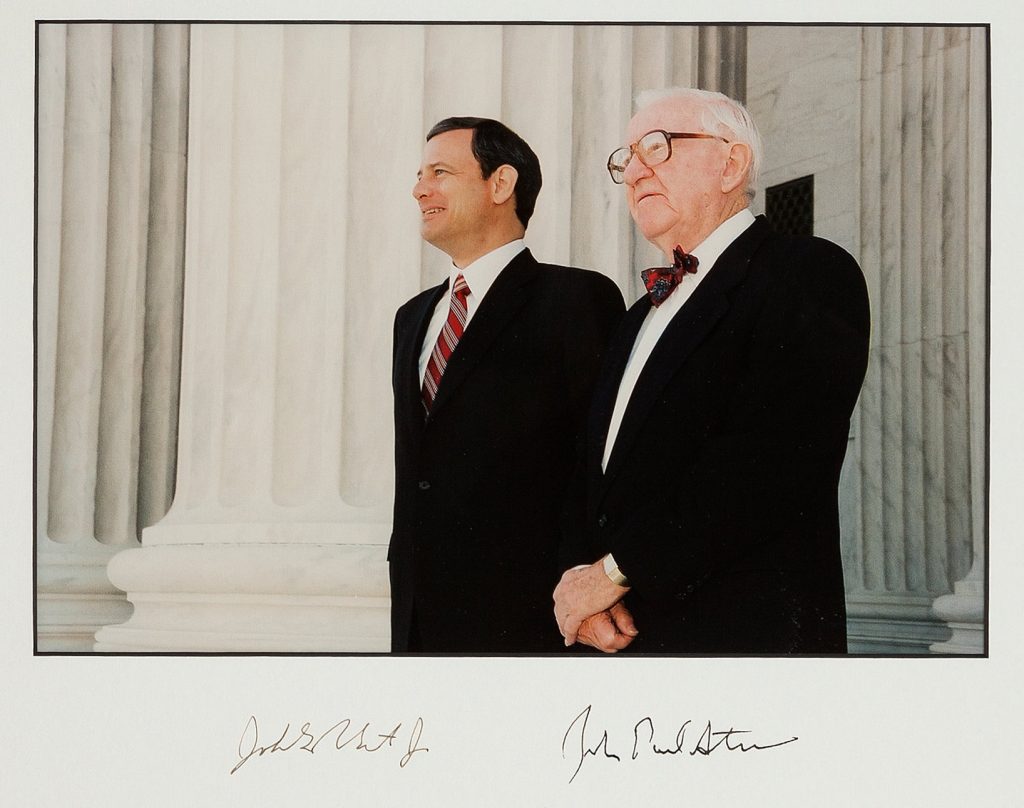

The U.S. Supreme Court heard three separate cases on the constitutionality of the internment, and the court decided it was a wartime necessity.

In 1988, President Ronald Reagan signed the Civil Liberties Act, and President George H.W. Bush later signed letters of apology that accompanied payments of $20,000 to surviving internees or their heirs. A total of 82,219 Japanese-Americans eventually received $1.6 billion in reparations.

Intelligent Collector blogger JIM O’NEAL is an avid collector and history buff. He is president and CEO of Frito-Lay International [retired] and earlier served as chair and CEO of PepsiCo Restaurants International [KFC, Pizza Hut and Taco Bell].
