By Jim O’Neal

Constitutional scholars are prone to claim there is a direct and historic link between the First Commandment of the Old Testament and the First Amendment of the U.S. Constitution … that one leads inexorably to the other. The First Commandment explains the origins of freedom and the First Amendment defines the scope of freedom in broad categories.

Both point unmistakably to freedom as America’s greatest contribution to humanity. Not the automobile, jazz or Hollywood. Not the word processor, the internet or the latest smartphones. All of these are often described as America’s unique assets, but it is the awesome concept of “freedom” that is America’s ultimate symbol, attraction … and even export!

The First Commandment reads, “I am the Lord thy God, which have brought thee out of the land of Egypt, out of the house of bondage. Thou shalt have no other gods before me.” In this way, God sanctions an escape from bondage and puts people on a path toward the “promised land,” toward freedom. This powerful message ricocheted through history until it finally found a permanent home in Colonial America.

In the early 18th century, the trustees of Yale, many of them scholars who read scripture in the original Hebrew, designed a coat of arms for the college in the shape of a book open to two words, Urim and Thummim, which have come to mean “Light” and “Truth” in English. The book depicted, of course, was the Bible.

Not too far north, Harvard graduates had to master three languages: Latin, Greek and Hebrew. True gentlemen in those days were expected to have more than a passing familiarity with the Old Testament. It was no mere coincidence that carved into the Liberty Bell in Philadelphia, relaying a brave message to a people overthrowing British rule, was an uplifting phrase selected from chapter 25 of Leviticus: “Proclaim liberty throughout all the land unto all the inhabitants thereof.”

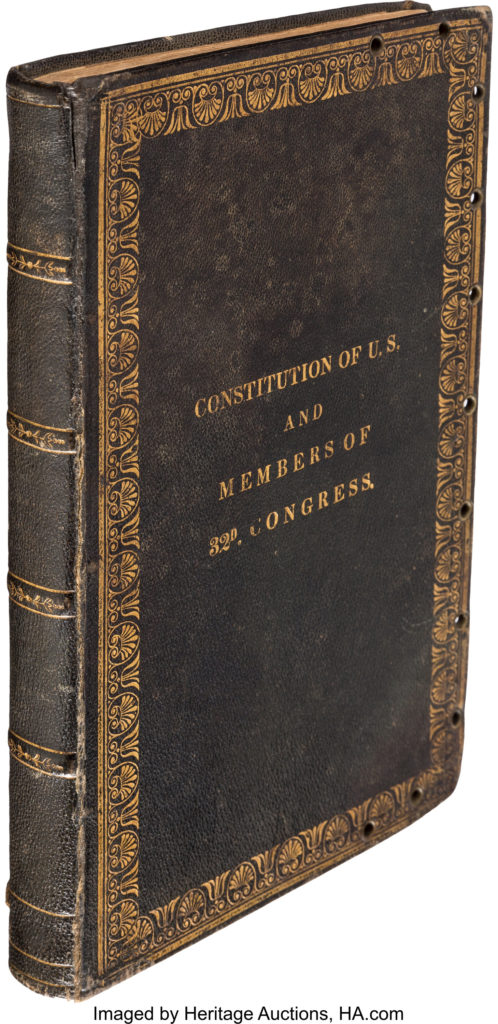

The Commandment that blessed an escape from oppression and embraced the pursuit of freedom led the Founding Fathers to pen the Bill of Rights. They had much on their minds and many interests to reconcile, but they agreed that the delineation of freedom was to be their primary responsibility. That delineation became the First Amendment to the recently ratified Constitution, inspired in part by the First Commandment, and it read, “Congress shall make no law respecting an establishment of religion, or prohibiting the free exercise thereof; or abridging the freedom of speech, or of the press; or the right of the people peaceably to assemble, and to petition the Government for a redress of grievances.”

Throughout American history, these freedoms have become so intertwined with American life that one is indistinguishable from the other. Just consider that within one small grouping of words, a single Amendment, resides more freedom for mankind than had ever existed in the history of the world. That is remarkable, in my opinion, yet today we take it for granted and argue over original intent only in small, largely insignificant instances.

Intelligent Collector blogger JIM O’NEAL is an avid collector and history buff. He is president and CEO of Frito-Lay International [retired] and earlier served as chair and CEO of PepsiCo Restaurants International [KFC, Pizza Hut and Taco Bell].