By Jim O’Neal

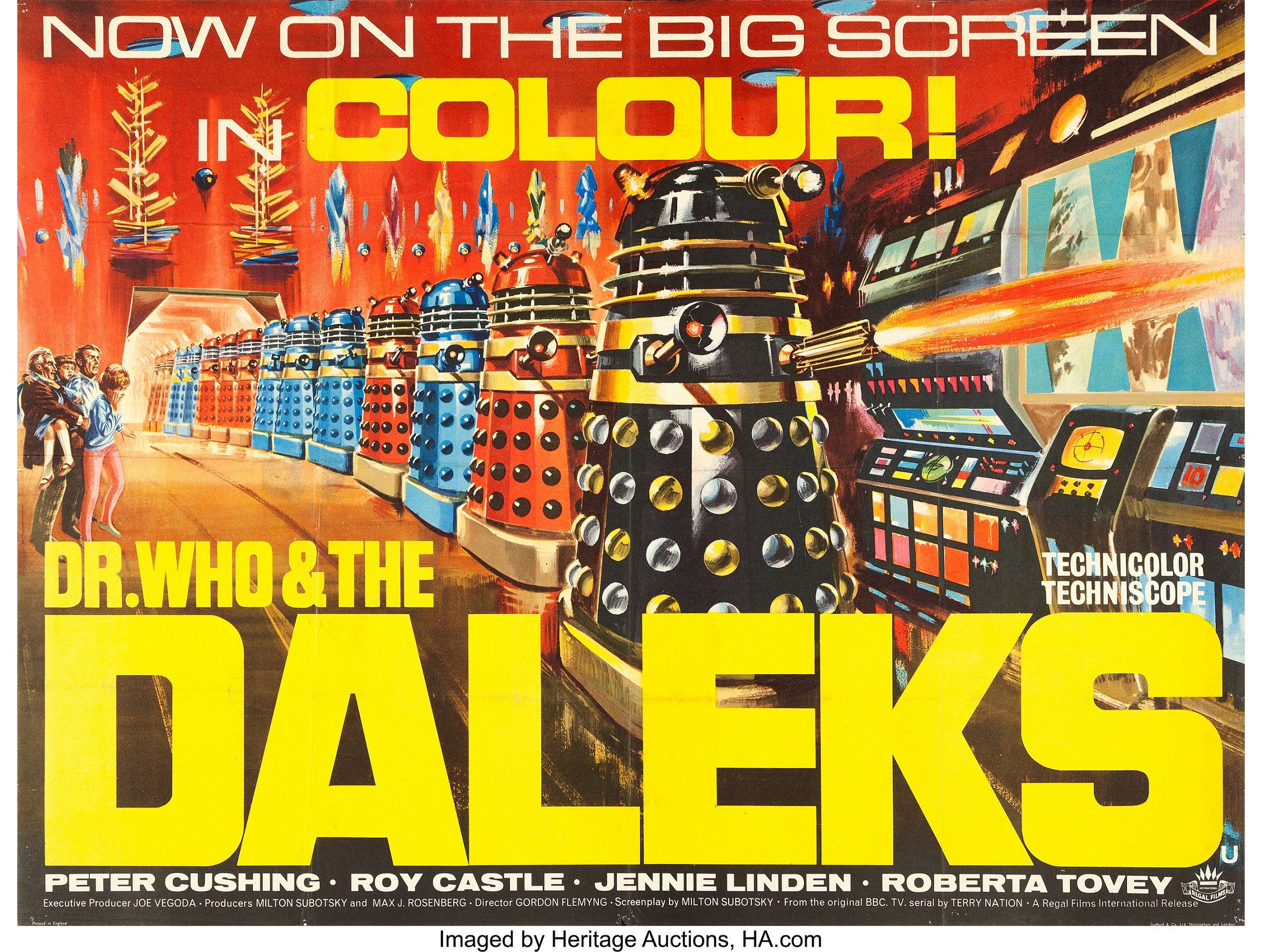

Doctor Who was a popular sci-fi TV series in Britain that originally ran on the BBC from 1963-89. Myth has it that the first episode was delayed by 80 seconds due to an announcement about President Kennedy’s assassination in Dallas. We had the opportunity to watch a 1996 made-for-TV movie in London that co-starred Eric Roberts (Julia’s older brother). Alas, it failed to generate enough interest to revive the series, which stayed off the air until a new version launched in 2005.

A 1982 episode from the show’s first run remains popular because its story claimed that aliens were responsible for the Great Fire of London in 1666, and it mentioned Pudding Lane. Ever curious, I drove to Pudding Lane, a rather small London street where the Great Fire started in Thomas Farriner’s bakery shortly after midnight on Sunday, Sept. 2, before raining terror down on one of the world’s great cities.

Pudding Lane also holds the distinction of being one of the first one-way streets in the world. Designated one-way in 1617 to alleviate congestion, it reminds one just how long Central London has struggled with an issue that plagues every large city. Near the bakery site stands the Monument, a famous landmark built in memory of the Great Fire. Not surprisingly, it was designed by the remarkable Sir Christopher Wren (1632-1723).

Wren was an acclaimed architect (perhaps the finest in history) who helped rebuild London with the support of King Charles II. This was no trivial task, since 80 percent of the city was destroyed, including many churches, most public buildings and private homes … up to 80,000 people were left homeless. Even more shocking, the disaster followed closely on the heels of the Great Plague of 1665, when as many as 100,000 people died. A few experts have suggested that the fire and the massive rebuilding that followed helped the disease-ridden city by eliminating the vermin still infesting parts of London.

One of Wren’s most famous works is St. Paul’s Cathedral, which he rebuilt after the fire and which remains perhaps the most recognizable sight in London today. Many high-profile events have been held there, including the funerals of Prime Ministers Winston Churchill and Margaret Thatcher, jubilee celebrations for Queen Victoria and Queen Elizabeth II, and the wedding of Prince Charles and Lady Diana … among many others.

Wren himself is buried in St. Paul’s Cathedral, and it is truly a magnificent sight to view his epitaph:

“Here in its foundations lies the architect of this church and city, Christopher Wren, who lived beyond ninety years, not for his own profit but for the public good. Reader, if you seek his monument – look around you. Died 25 Feb. 1723, age 91.”

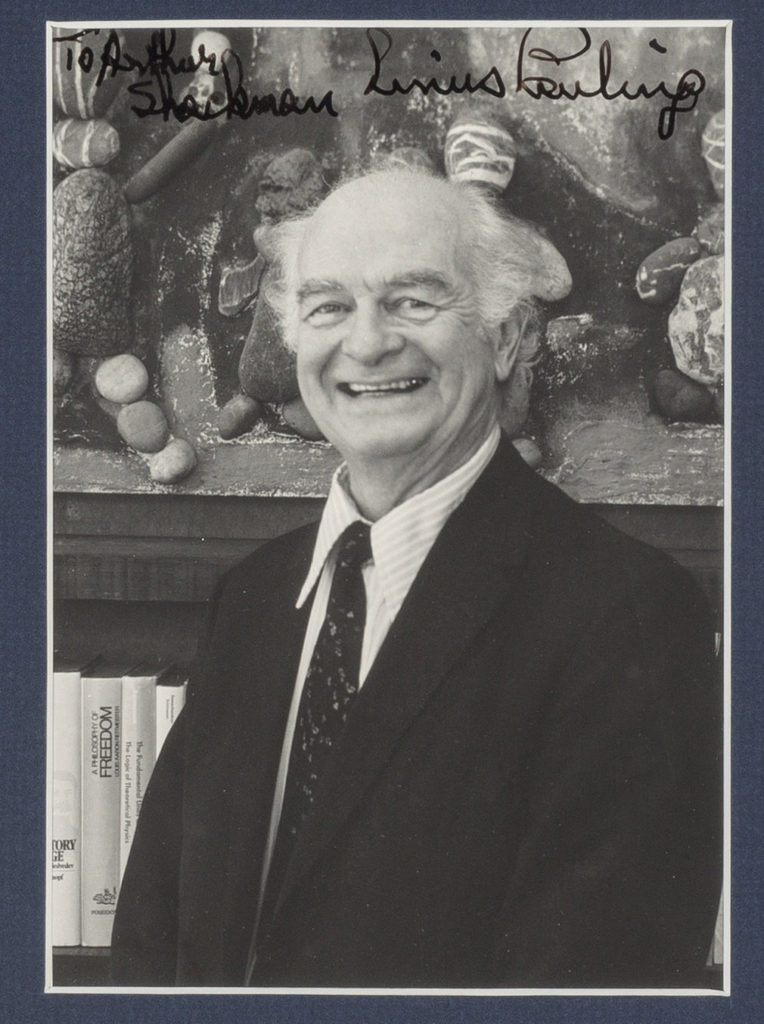

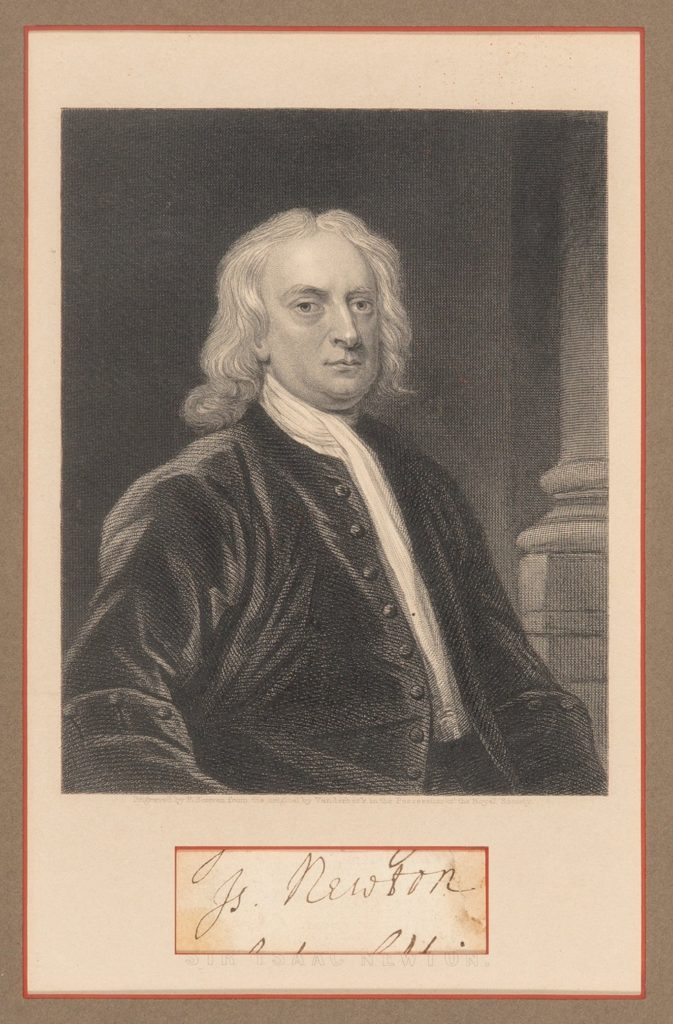

In addition to his reputation as an architect, Wren was renowned for his astounding work as an astronomer and as a co-founder of the elite Royal Society, where he discussed anything scientific with Sir Isaac Newton, Robert Hooke and, importantly, Edmond Halley of comet fame. Halley’s Comet is the only known short-period comet that is regularly visible to the naked eye, returning every 75-76 years. It last passed through the inner solar system in 1986 and will return in mid-2061.

Samuel Langhorne Clemens (aka Mark Twain) was born shortly after Halley’s Comet appeared in 1835 and predicted that he would “go out with it.” He died in 1910, the day after the comet made its closest approach to the sun … presumably to pick up another passenger. We all know about Twain, Tom Sawyer and Huckleberry Finn. But far fewer know about his unique relationship with Helen Keller (1880-1968). She was a mere 14 when she met the world-famous Twain in 1894.

They became close friends, and he arranged for her to attend Radcliffe College of Harvard University. She graduated in 1904 as the first deaf and blind person in the world to earn a Bachelor of Arts degree. She learned to read English, French, Latin and German in braille. Her friend Twain called her “one of the two most remarkable people in the 19th century.” Curiously, the other was Napoleon.

I share his admiration for Helen Keller.

Intelligent Collector blogger JIM O’NEAL is an avid collector and history buff. He is the retired president and CEO of Frito-Lay International and earlier served as chair and CEO of PepsiCo Restaurants International [KFC, Pizza Hut and Taco Bell].