By Jim O’Neal

When Ho Chi Minh lived in London, training as a pastry chef under Auguste Escoffier at the Carlton Hotel, he used it as a pillow. Fidel Castro claimed he read 370 pages (about half) in 1953 while he was in prison after a failed revolutionary attack on the Moncada barracks in Santiago de Cuba. President Xi Jinping of China hailed its author as “the greatest thinker of modern times.”

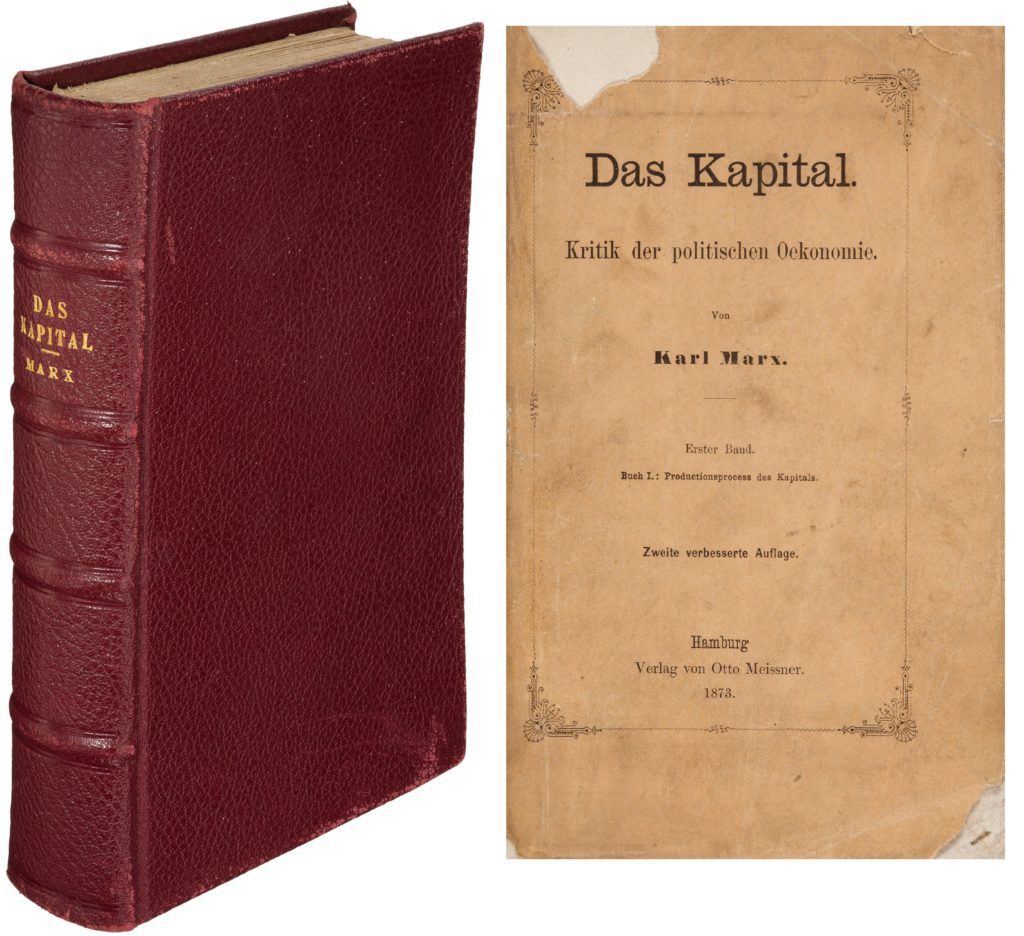

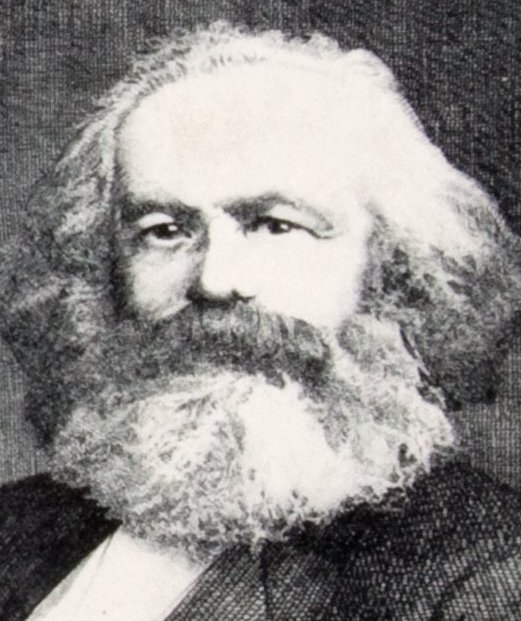

It’s been 200 years since Karl Marx was born on May 5, 1818, in Trier, Germany. His book Das Kapital was published in 1867, or at least that was when Volume 1 made its way into print. His friend and benefactor Friedrich Engels edited Volumes 2 and 3 after Marx’s death.

Engels (1820-1895) was born in Prussia, dropped out of high school and finally made it to England to help run his father’s textile factory in Manchester. On his trip, he met Marx for the first time, but it would be later before their friendship blossomed. Perhaps it was due to Engels’ 1845 book The Condition of the Working Class in England.

Engels had observed the slums of Manchester, the horrors of child labor, the utter impoverishment of laborers in general, and the environmental squalor that was so pervasive. This was not a new indictment, since Thomas Robert Malthus (1766-1834) had written, albeit anonymously, about these abysmal conditions. However, Malthus had blamed the poor for their plight and opposed the concept of relief “since it simply increases their tendency to idleness.” He was particularly harsh on the Irish, writing that a “great part of the population should be swept from the soil.”

Not surprisingly, mortality rates soared, especially for the poor, and the average life expectancy fell to an astonishing 18.5 years. These lifespan levels had not existed since the Bronze Age and even in the healthiest areas, life expectancy was in the mid-20s, and nowhere in Britain exceeded 30 years.

Life expectancy had largely been uncertain until Edmond (the Comet) Halley obtained a cache of records from an area in Poland in 1693. Ever the tireless investigator of any and all scientific data, he suddenly realized he could calculate the life expectancy of any person still alive. From these unusually complete data charts, he created the very first actuarial tables. In addition to all the many other uses, this is what enabled the creation of the life insurance industry as a viable service.

One of the few who sympathized with the poor was the aforementioned Friedrich Engels, who spent his time embezzling funds from the family business to support his collaborator Karl Marx. They both passionately blamed the industrial revolution and capitalism for the miserable conditions of the working class. While diligently writing about the evils of capitalism, both men lived comfortably from the benefits it provided them personally. To label them as hypocrites would be far too mild a rebuke.

There was a stable of fine horses, weekends spent fox hunting, slurping the finest wines, a handy mistress, and membership in the elite Albert Club. Marx was an unabashed fraud, denouncing the bourgeoisie while living in excess with his aristocratic wife and his two daughters in private schools. In a supreme act of deception, he accepted a job in 1851 as a foreign correspondent for Horace Greeley’s New-York Tribune. Due to his poor English, he had Engels write the articles while he cashed the checks.

Even then, Marx’s extravagant lifestyle couldn’t be maintained and he convinced Engels to pilfer money from his father’s business. They were partners in crime while denouncing capitalism at every opportunity.

In the 20th century, Eugene Victor Debs ran for U.S. president five times as a Socialist candidate, the last time (1920) from a prison cell in Atlanta while serving time after being found guilty of 10 counts of sedition. His 1926 obituary told of him having a copy of Das Kapital and “the prisoner Debs read it slowly, eagerly, ravenously.”

In the 21st century, Senator Bernie Sanders of Vermont ran for president in 2016, despite the overwhelming odds at a Democratic National Convention that used superdelegates to select his Democratic opponent. In a series of televised debates, he predictably promised free healthcare for all, a living wage for underpaid workers, college tuition and other “free stuff.” I suspect he will be back in 2020 due to overwhelming support from Millennials, who seem to like the idea of “free stuff,” but he may have 10 to 20 other presidential hopefuls who’ve noticed that energy and enthusiasm.

One thing: You cannot call Senator Sanders a hypocrite like Karl Marx. In 1979, Sanders produced a documentary about Eugene Debs and hung his portrait in the Burlington, Vt., City Hall, when he became its mayor after running as a Socialist.

As British Prime Minister Margaret Thatcher once said: “The problem with Socialism is that eventually you run out of other people’s money.”

JIM O’NEAL is an avid collector and history buff. He is president and CEO of Frito-Lay International [retired] and earlier served as chair and CEO of PepsiCo Restaurants International [KFC, Pizza Hut and Taco Bell].
