By Jim O’Neal

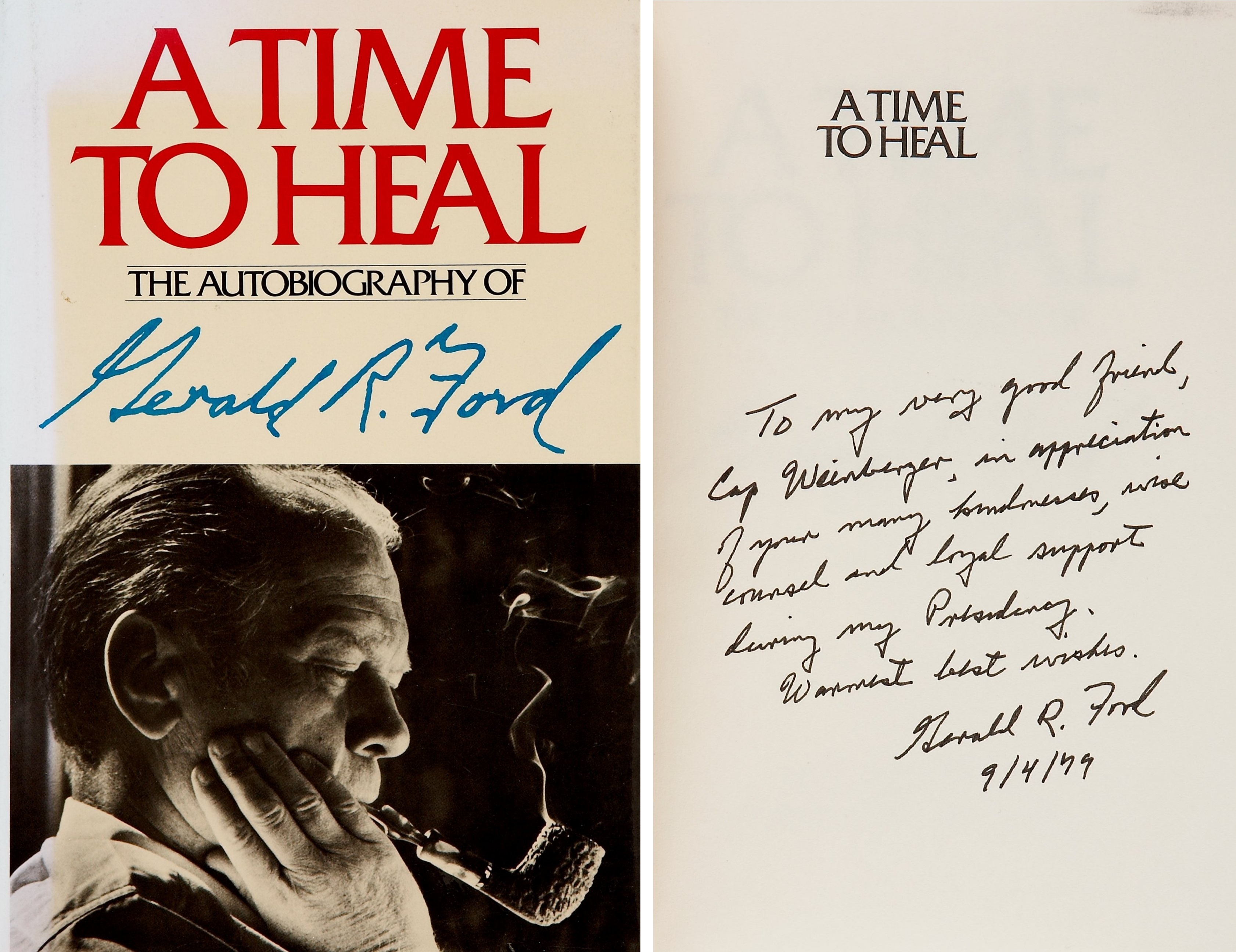

When Vice President Spiro Agnew resigned in 1973, accepting conviction for income tax violations in lieu of facing trial on bribery charges, the door to the White House swung open to Gerald R. Ford.

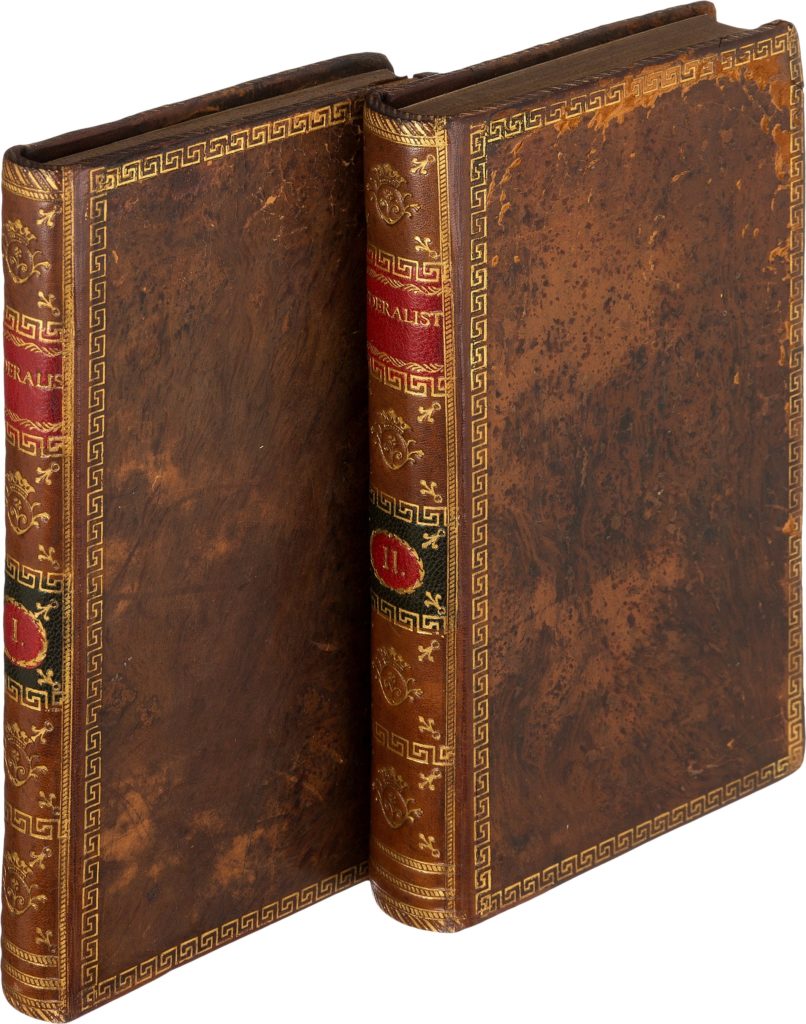

The Constitution’s 25th Amendment, adopted in 1967, came into use for the first time. It provided that a vacancy in the office of vice president could be filled by nomination by the president and confirmation by both houses of Congress. President Richard Nixon, reeling from the twin blows of the Watergate scandals and the Agnew bribery charges, began a frantic scramble to fill the vacancy with someone the public would accept and Congress would quickly approve. He also needed someone he could trust as unquestionably loyal.

Nixon announced Ford’s nomination on Oct. 12, 1973, barely two weeks before the House Judiciary Committee began formal proceedings to determine whether Nixon should be impeached. Nobody in Congress could dig up a smidgen of impropriety regarding Ford, and the House approved his nomination 387-35 on Dec. 6 after a Senate vote of 92-3 on Nov. 27. During the hearings, Ford was asked if he would pardon Nixon should Nixon resign, and he replied, “I do not think the public would stand for it.”

A short but tumultuous eight months later, Ford became the 38th president of the United States in a moment of high drama at noon on Aug. 9, 1974. Shortly before, the nation had been glued to the TV as Nixon became the first president in history to resign. He departed the White House after a tearful farewell to his staff. A few minutes later, the cameras turned to Ford, the first vice president to ascend to the presidency by appointment.

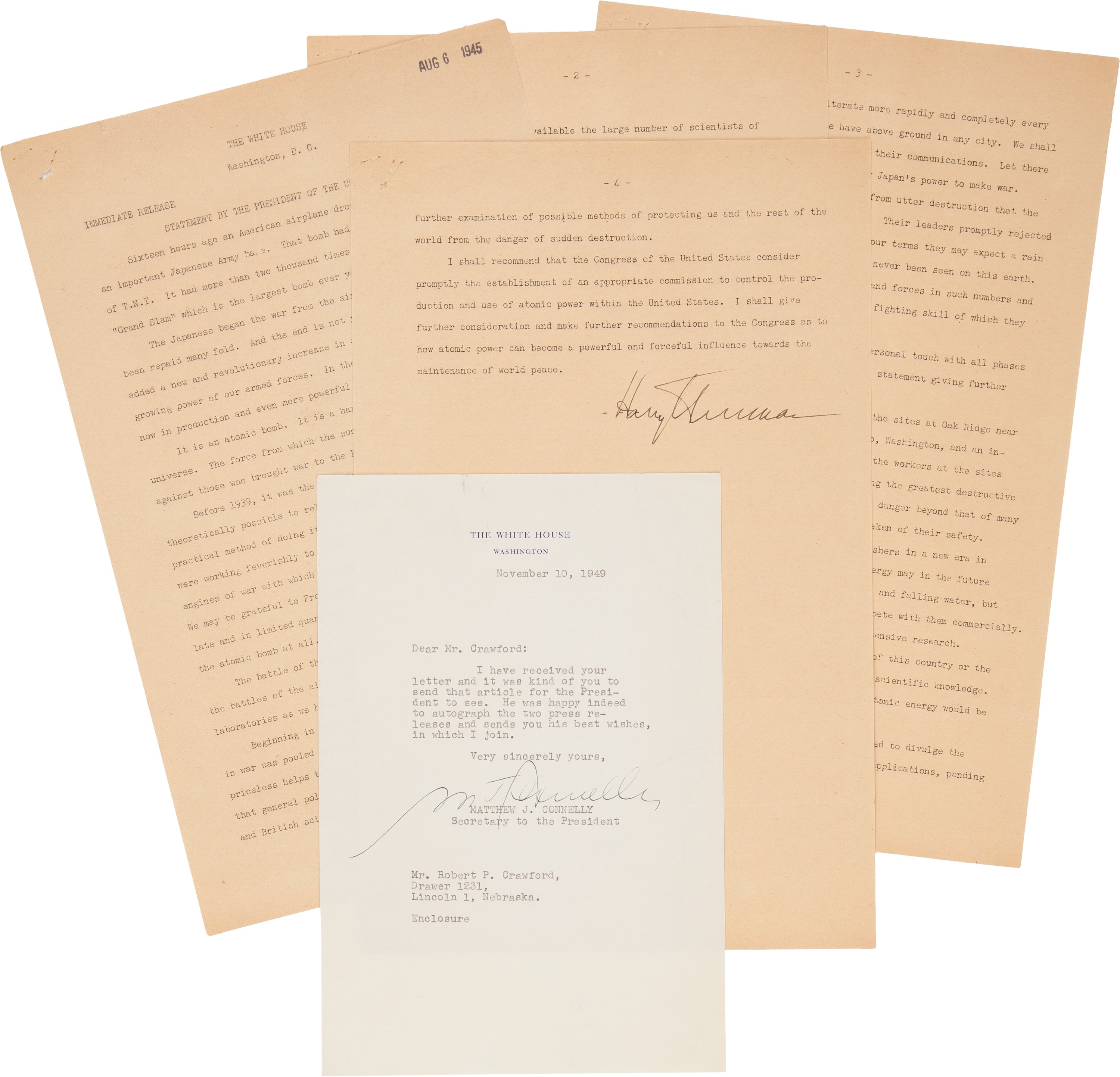

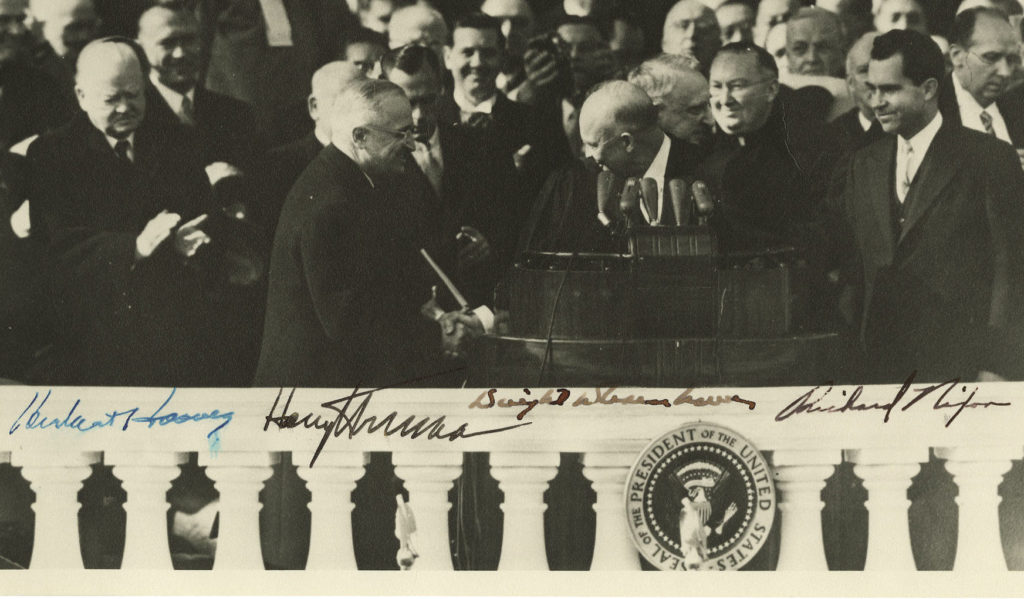

Ford was sworn in on the same East Room platform where Nixon had stood moments earlier, although the White House was not the usual place for a swearing-in ceremony. Rutherford B. Hayes was sworn in before the fireplace in the Red Room, FDR’s fourth term began on the South Portico, and Harry S. Truman had taken the oath in the Cabinet Room. By then, the Nixons were on Air Force One headed for San Clemente. When the clock struck noon, the plane’s Air Force One designation was dropped.

Within a week of Ford’s swearing-in, documents were being hauled out by Nixon staffers in “suitcases and boxes” every day. Working late on Aug. 16, Benton Becker, Ford’s legal counsel, observed a number of military trucks lined up on West Executive Avenue, between the West Wing and the Executive Office Building. Upon inquiry, he was told they were there “to load material that was to be airlifted from Andrews Air Force Base to San Clemente.” Sensing something was wrong, Becker got the Secret Service to intervene, and the trucks were unloaded and the material returned to the EOB. Soon, an armed guard was stationed there to protect it.

Perhaps it was one last ploy by Tricky Dick, à la the 18½ minutes of recording “accidentally” erased. We will never know. But we do know that Ford’s pardon of Nixon, which caused such a national outrage, has finally been judged the prudent thing to do … finally.

Intelligent Collector blogger JIM O’NEAL is an avid collector and history buff. He is President and CEO of Frito-Lay International [retired] and earlier served as Chairman and CEO of PepsiCo Restaurants International [KFC, Pizza Hut and Taco Bell].