By Jim O’Neal

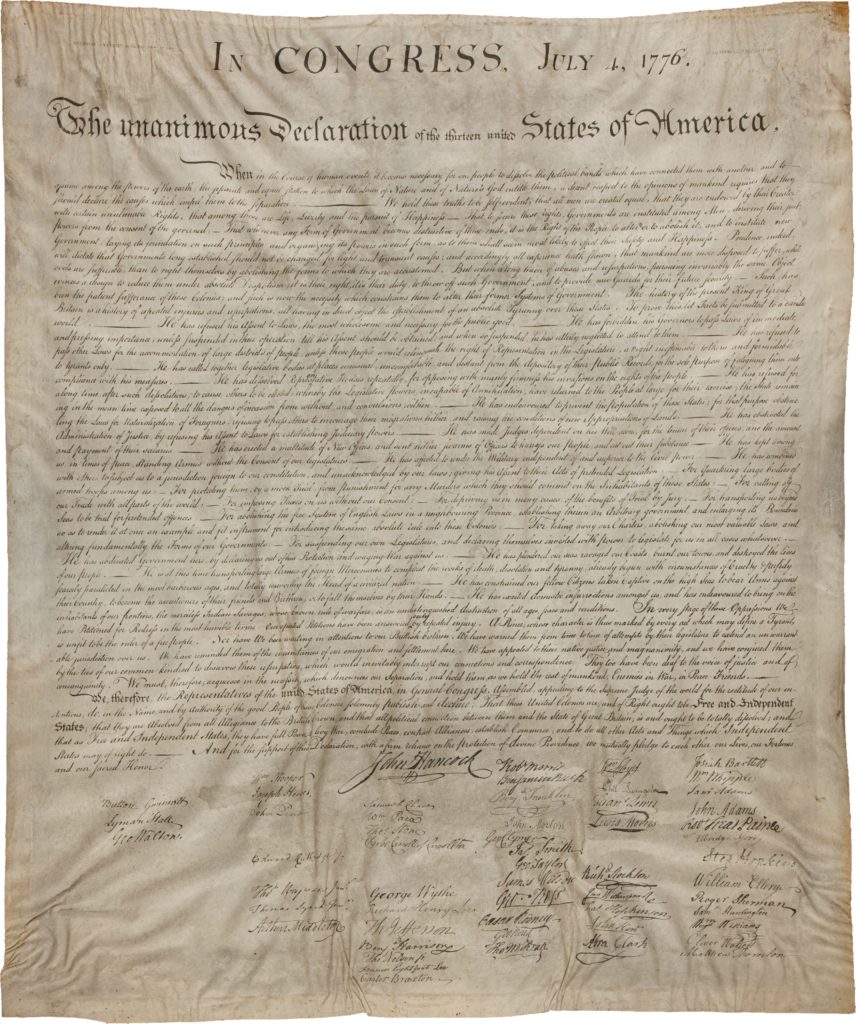

The Supreme Court was created in 1789 by Article III of the U.S. Constitution, which stipulates “the judicial power of the United States shall be vested in one Supreme Court.” Congress organized it with the Judiciary Act of 1789.

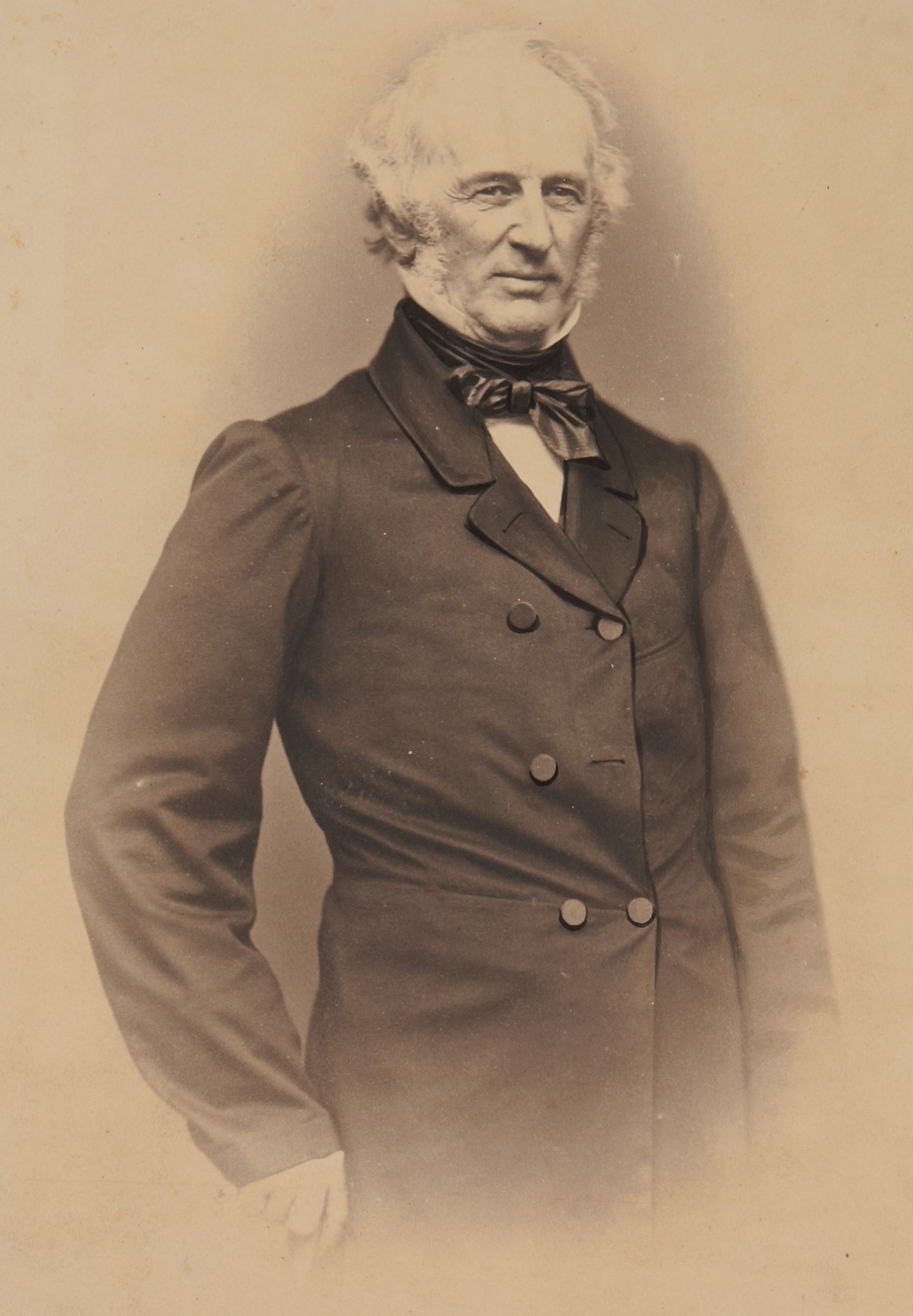

John Jay of New York, one of the Founding Fathers, was the first Chief Justice of the United States (1789–95). Earlier, he was president of the Continental Congress (1778–79) and worked to ratify the U.S. Constitution by writing five of the Federalist Papers. Alexander Hamilton and James Madison wrote the other 80 essays, which were published in two volumes called “The Federalist” (“The Federalist Papers” title emerged in the 20th century).

Nearly 175 years later, in 1962, President John F. Kennedy nominated Byron Raymond “Whizzer” White to replace Associate Justice Charles Whittaker, who became chief legal counsel to General Motors (presumably with a nice salary increase). Whittaker had been the first person to serve as judge at all three levels: Federal District Court, Federal Court of Appeals, and the U.S. Supreme Court (a distinction matched by Associate Justice Sonia Sotomayor).

White was the Colorado state chair for JFK’s 1960 presidential campaign. He had met both the future president and his father, Joe, while attending Oxford University on a Rhodes Scholarship, when Joe Kennedy was ambassador to the Court of St. James’s in London. This was after White had graduated Phi Beta Kappa from the University of Colorado, where he was also a terrific athlete, playing basketball and baseball and finishing runner-up for the Heisman Trophy in football. He is unquestionably the finest athlete to serve on the Supreme Court.

He continued mixing scholarship and athletics at Yale Law School, where he graduated No. 1 in his class, magna cum laude. He also played three seasons in the National Football League, for the Pittsburgh Pirates (now the Steelers) and the Detroit Lions. He was elected to the College Football Hall of Fame in 1954.

Justice White was in the minority on the now-famous Roe v. Wade landmark decision on Jan. 22, 1973. Coincidentally, a now virtually forgotten companion case, Doe v. Bolton (Mary Doe v. Arthur K. Bolton, Attorney General of Georgia, et al.), was decided on exactly the same day and on the identical issue (overturning the abortion law of Georgia). White was in the minority here, too.

White’s nomination was confirmed by a simple voice vote (i.e., by acclamation). He was the first person from Colorado to serve on the Supreme Court, and it appears that one of his law clerks … Judge Neil Gorsuch, also from Colorado … most likely will become the second, although it is unlikely he will receive many Democratic votes, much less a voice vote.

Times have certainly changed in judicial politics and, unfortunately, for the worse. Advise and Consent has morphed into a “just say no” attitude and we have lost something sacred in the process.

Intelligent Collector blogger JIM O’NEAL is an avid collector and history buff. He is president and CEO of Frito-Lay International [retired] and earlier served as chairman and CEO of PepsiCo Restaurants International [KFC, Pizza Hut and Taco Bell].
