By Jim O’Neal

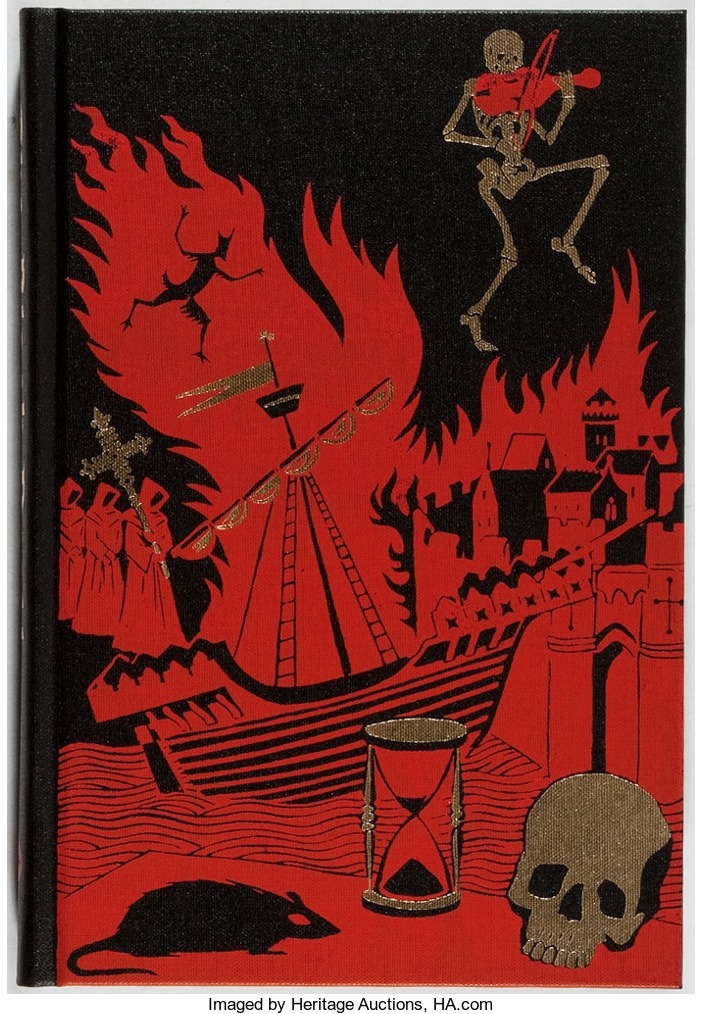

Thomas Farynor, baker for England’s King Charles II, usually doused the fires in his oven before going to bed. But on the night of Saturday, Sept. 1, 1666, he forgot, and at 2 a.m. he was awakened by fire engulfing his house.

Farynor lived on Pudding Lane near Thames Street, a busy thoroughfare lined with warehouses that ran along the river wharves. It was typical of London streets … very narrow and crammed with houses made of timber.

As the flames spread and people awoke and scrambled to escape, piles of straw ignited and nearby Fish Street Hill exploded into fire.

Samuel Pepys climbed to the top of the Tower of London to get a better view. At 7 a.m., he described how an east wind suddenly turned into a gale and whipped the fire into a raging conflagration. The Great Fire of London was out of control.

As early as 1664, writer John Evelyn had warned of the danger of such an event due to so many open fires and furnaces in such a “wooden … and inartificial congestion of houses on either side that seemed to lean over and touch each other.” Everyone was too busy to worry about it.

There were fire engines for emergencies, but they were rudimentary and privately owned. There was no official London fire brigade. In the chaos, any pumps that did get into service were hampered by large crowds clogging the streets as people dragged furniture along in a vain attempt to salvage their valuables.

The other strategy was firebreaks: pulling down buildings with huge iron hooks and quickly clearing the debris to create barren gaps. However, the fire was moving so quickly that the flames swept through the rubble before it could be cleared.

Back atop the Tower of London, Pepys observed “an infinite great fire headed right at London Bridge.”

London Bridge spanned the Thames River and was an extraordinary structure … lined with homes and shops separated by a passageway only a few yards wide. The fire attacked the bridge greedily, leaping from rooftop to rooftop as people frantically fled.

By Sunday evening, boats carrying people swarmed across the river, where onlookers lined the shore, mesmerized by the enormous blaze.

On Monday, a powerful wind drove the fire through London. Houses, churches and buildings were all consumed as the blaze continued to rage. An East India warehouse full of spices blew up and the smoke carried the smell of incense across the city.

Finally, by Wednesday, the wind subsided, and 200,000 Londoners looked in astonishment at their great city, now turned to ash … 13,000 houses, 87 churches, St Paul’s Cathedral, the Royal Exchange, the Customs House, all the city prisons and the Great Post Office had been destroyed.

The antiquary Anthony Wood said, “All astrologers did use to say Rome would have an end and the Antichrist come, 1666, but the prophecie fell on London.”

All because a baker forgot to put out his oven.

We all know what Smokey the Bear would say.

Intelligent Collector blogger JIM O’NEAL is an avid collector and history buff. He is President and CEO of Frito-Lay International [retired] and earlier served as Chairman and CEO of PepsiCo Restaurants International [KFC, Pizza Hut and Taco Bell].