By Jim O’Neal

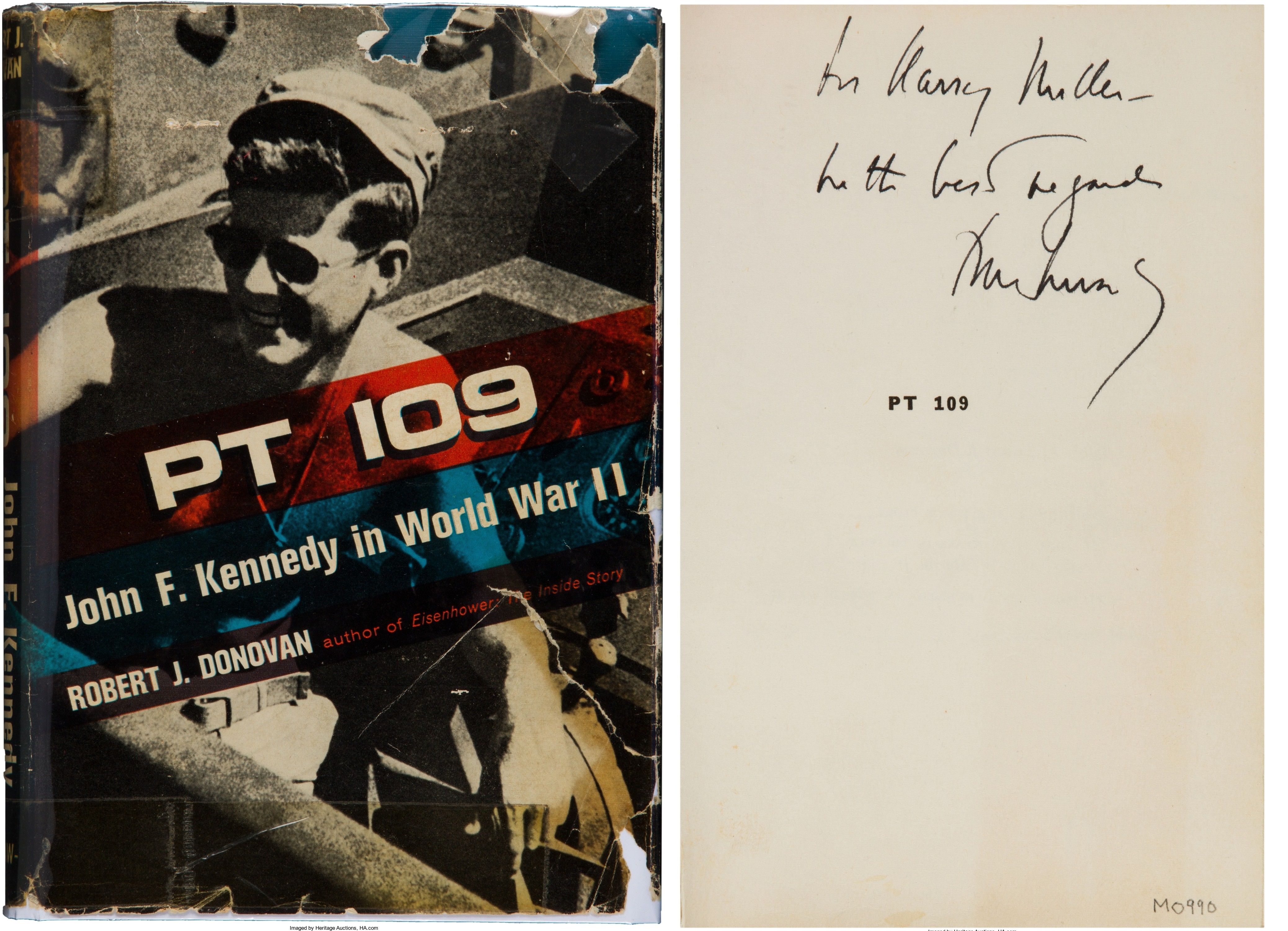

When war broke out in 1939, all the Rhodes Scholars in England were sent home, including Byron “Whizzer” White. He went back to Yale and graduated from its law school with honors. Then, in 1942, he enlisted in the Navy, as so many others did. He was serving in the Solomon Islands as a PT boat squadron skipper and intelligence officer when John F. Kennedy was a PT boat officer. It was White who personally wrote the official account of the battle events that were later portrayed in the book and movie PT 109.

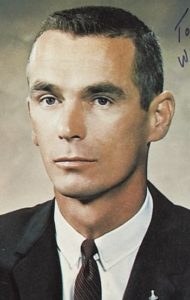

Flash forward 20 years and there was a famous photo of a smiling Kennedy, now president of the United States, pointing at the front-page headline of the New York Herald Tribune – “WHIZZER WHITE TO SUPREME COURT – LAWYER, NAVAL OFFICER, FOOTBALL STAR.” It was JFK’s first appointment to the Supreme Court.

In August 1962, President Kennedy got a second bite at the same apple. Justice Felix Frankfurter, once styled as “the most important single figure in our whole judicial system,” bowed to the effects of a stroke and announced his retirement. The president acceded to his request and called a press conference to announce he had chosen Secretary of Labor Arthur Goldberg as the replacement.

This was not a great surprise, since the 54-year-old labor expert was well-qualified and eager to join the court. The only slight reluctance stemmed from his close personal relationship with the president and the loss of a highly valued cabinet member. However, both Chief Justice Earl Warren and Frankfurter himself supported the decision and it was made.

The nation’s reaction was universally favorable and the Senate Judiciary Committee was in total agreement. Goldberg was confirmed by the full Senate, with only Senator Strom Thurmond recording his opposition. Thus the new justice was able to take his seat on the court in time for the October 1962 term. The world of the judiciary was at peace, even after the tragic events in Dallas in November 1963 and the Warren Commission investigation that followed.

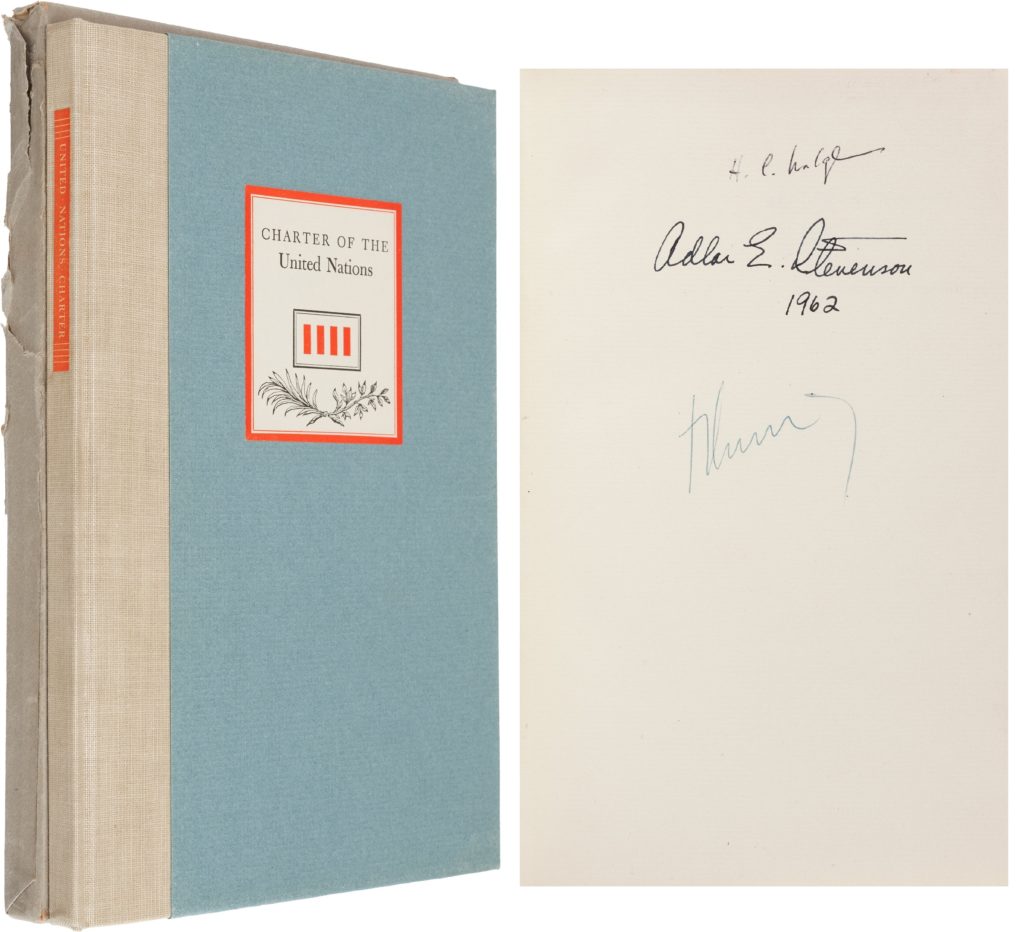

However, after Goldberg had spent a mere three years on the Supreme Court, President Lyndon B. Johnson decided that he should resign from the court and become ambassador to the United Nations, succeeding Adlai Stevenson. It now seems clear that LBJ’s motive was the naive hope that Goldberg might somehow be able to negotiate an end to the nightmare in Vietnam. Goldberg was strongly opposed to the move, but as he explained to a confidant, “Have you ever had your arm twisted by LBJ?”

Supposedly, there was also a clearly implied understanding of an ultimate return to the court, which obviously never materialized. Neither did an LBJ suggestion that Goldberg might be a candidate for the 1968 vice-presidential slot – another false hope, rendered moot by LBJ’s decision not to seek reelection.

Lost in all of this was the fact that Goldberg’s intended replacement on the court, Abe Fortas, had repeatedly declined LBJ’s offers to be a Supreme Court justice. In fact, poor Abe Fortas never said yes. The president simply invited him to the Oval Office and informed him that he was about to go to the East Wing “to announce his nomination to the Supreme Court” and that he could stay in the office or accompany him.

Fortas decided to accompany the president, but to the assembled reporters he appeared only slightly less disenchanted than the grim-faced Goldberg, with his tearful wife and son by his side. Goldberg had reluctantly agreed to become ambassador to the United Nations and commented to the assembled group, “I shall not, Mr. President, conceal the pain with which I leave the court.”

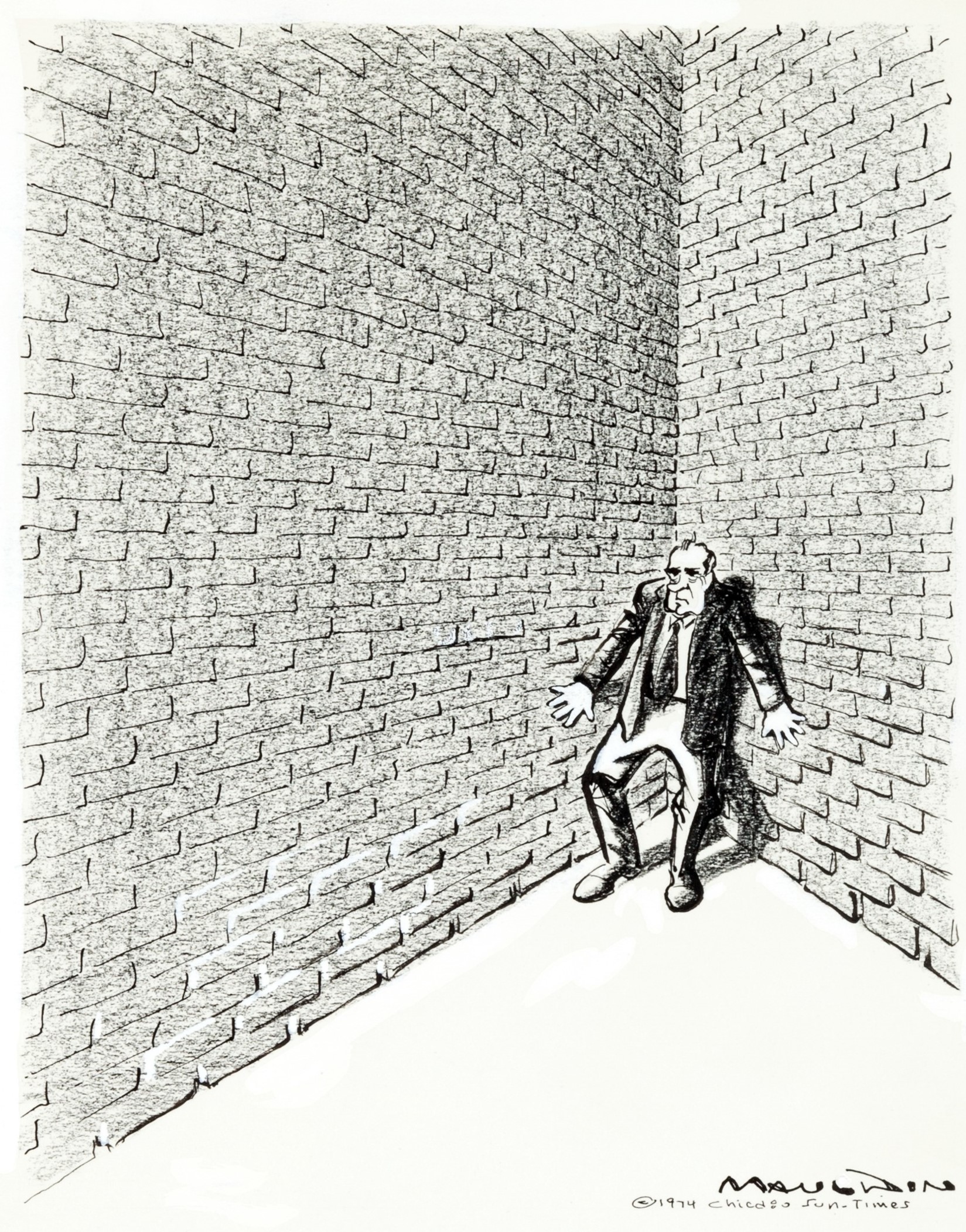

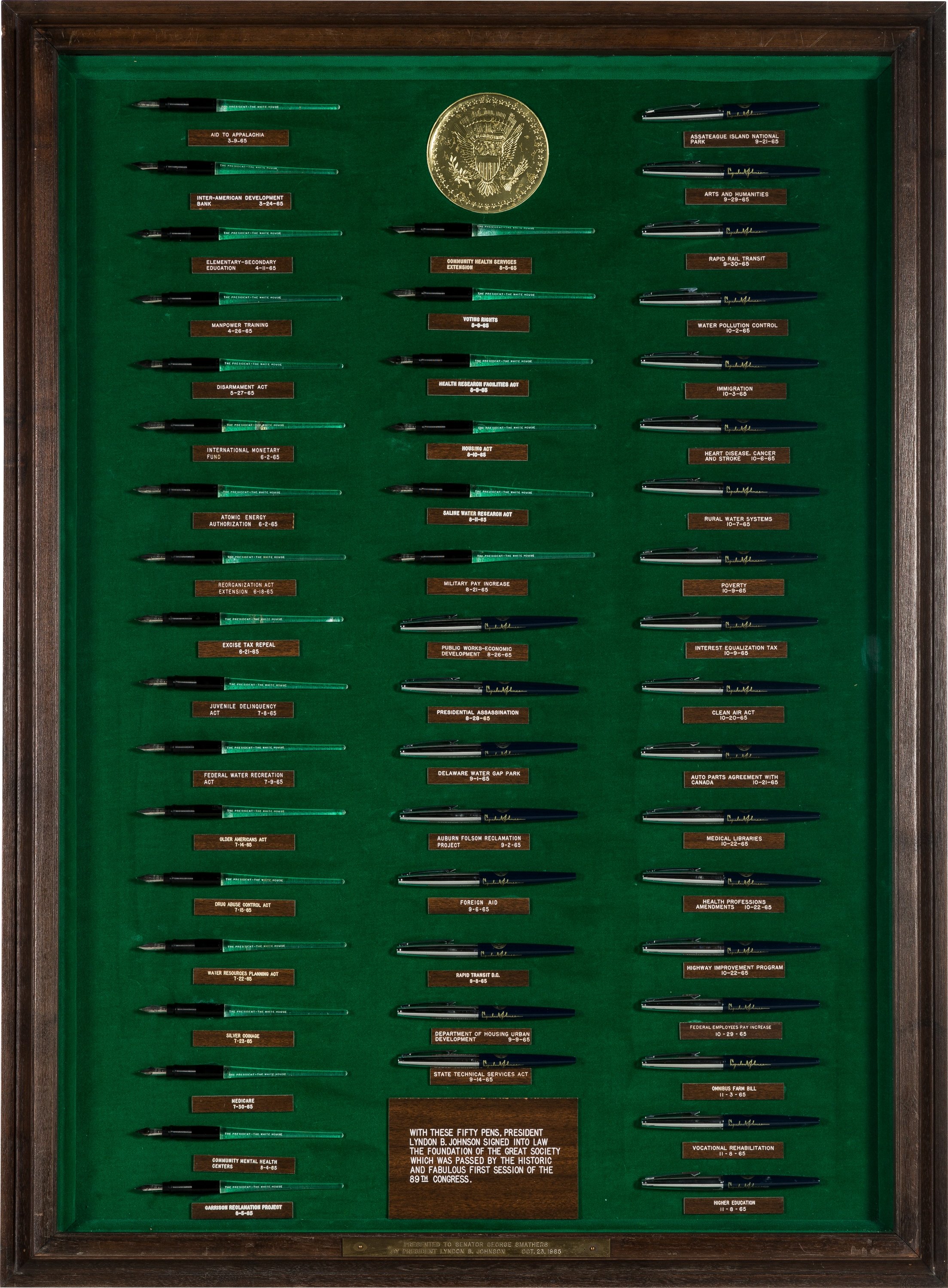

It was a veritable funereal ceremony – except for a broadly smiling LBJ, who had once again worked his will on others, irrespective of their feelings. The man certainly did know how to twist arms – and I suspect necks and other body parts – until he achieved his objectives.

He was sooo good at domestic politics, it seems sad he had to also deal with foreign affairs, where a different skill set was needed.

Intelligent Collector blogger JIM O’NEAL is an avid collector and history buff. He is president and CEO of Frito-Lay International [retired] and earlier served as chairman and CEO of PepsiCo Restaurants International [KFC, Pizza Hut and Taco Bell].
