By Jim O’Neal

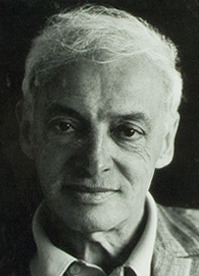

Saul Bellow was a Canadian-born writer who became a naturalized U.S. citizen after discovering he had immigrated to the United States illegally as a child. He hit the big time in 1964 with his novel Herzog, which won the U.S. National Book Award for fiction. Time magazine named it one of the 100 best novels in the English language since “the beginning of Time” (March 3, 1923).

Along the way, Bellow (1915-2005) also managed to squeeze in a Pulitzer Prize, the Nobel Prize in Literature, and the National Medal of Arts. He is the only writer to win the National Book Award for Fiction three times.

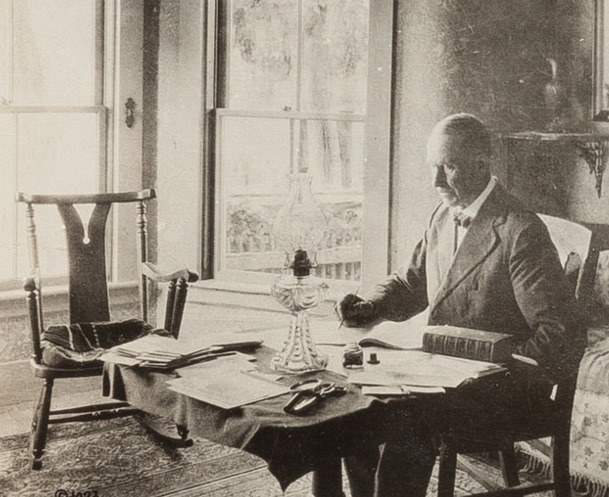

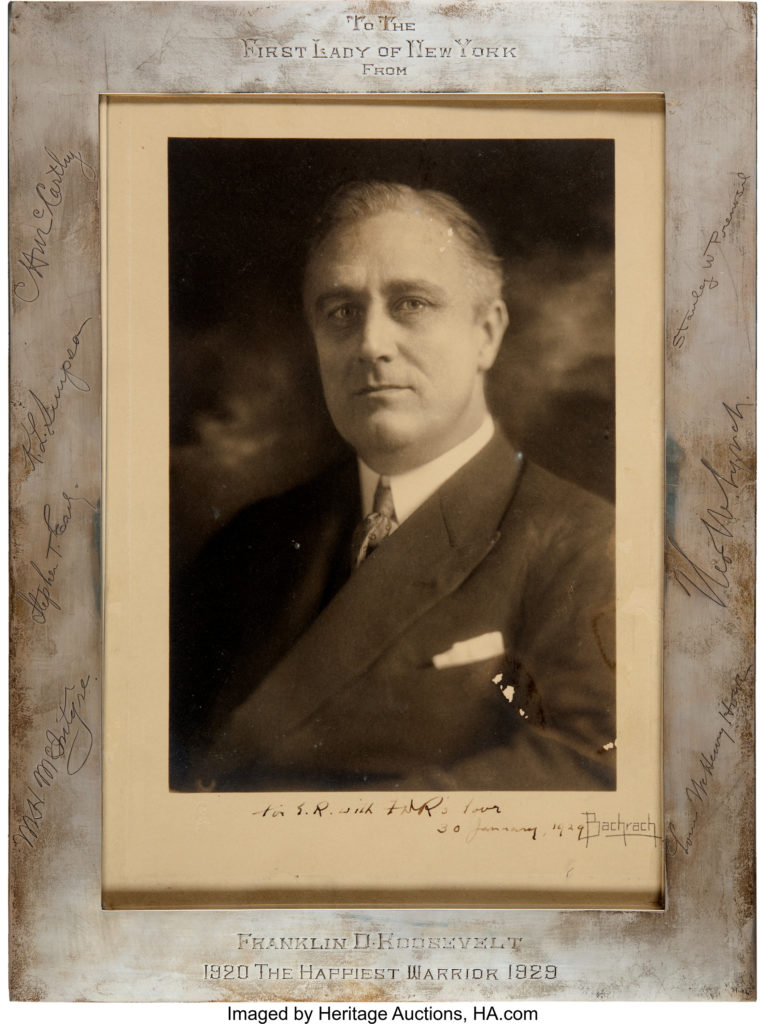

Bellow loved to describe his personal experience listening to President Roosevelt, an American aristocrat (Groton and Harvard educated), hold the nation together, using only a radio and the power of his personality. “I can recall walking eastward on the Chicago Midway … drivers had pulled over, parking bumper to bumper, and turned on their radios to hear every single word. They had rolled down the windows and opened the car doors. Everywhere the same voice, its odd Eastern accent, which in anyone else would have irritated Midwesterners. You could follow without missing a single word as you strolled by. You felt joined to these unknown drivers, men and women smoking their cigarettes in silence, not so much considering the president’s words as affirming the rightness of his tone and taking assurances from it.”

The nation needed the assurance of those fireside chats, the first of which was delivered on March 12, 1933. Between a quarter and a third of the workforce was unemployed. It was the nadir of the Great Depression.

The “fireside” was figurative; most of the chats emanated from a small, cramped room in the White House basement. Secretary of Labor Frances Perkins described the change that would come over the president just before the broadcasts. “His face would smile and light up as though he were actually sitting on the front porch or in the parlor with them. People felt this, and it bound them to him in affection.”

Roosevelt’s fireside chats and, indeed, all of his efforts to communicate contrasted with those of another master of the airwaves, Adolf Hitler. Hitler used radio to fuel rage in the German people and to encourage their need to blame, while FDR reasoned with and encouraged America. Hitler’s speeches were pumped through cheap plastic radios manufactured expressly to ensure complete penetration of the German consciousness. FDR’s appropriation of this new medium for reason and common sense was one of the great triumphs of American democracy.

Herr Hitler ended up committing suicide after ordering his body burned to prevent the Allies from retrieving any of his remains. So ended the grand 1,000-year Reich he had promised … poof … gone with the wind.

Intelligent Collector blogger JIM O’NEAL is an avid collector and history buff. He is president and CEO of Frito-Lay International [retired] and earlier served as chair and CEO of PepsiCo Restaurants International [KFC, Pizza Hut and Taco Bell].
